Gre Is A Tunneling Protocol Designed To Apply To Which Segment Of An Ip-based Connection?

This manual covers the main aspects of TCP/IP and Ethernet in detail, including practical implementation, troubleshooting and maintenance.

Revision 6

Website: www.idc-online.com

E-mail: [email protected]

IDC Technologies Pty Ltd

PO Box 1093, West Perth, Western Australia 6872

Offices in Australia, New Zealand, Singapore, United Kingdom, Ireland, Malaysia, Poland, United States of America, Canada, South Africa and India

Copyright © IDC Technologies 2013. All rights reserved.

First published 2001

ISBN: 978-1-922062-07-9

All rights to this publication, associated software and workshop are reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means electronic, mechanical, photocopying, recording or otherwise without the prior written permission of the publisher. All enquiries should be made to the publisher at the address above.

Disclaimer

Whilst all reasonable care has been taken to ensure that the descriptions, opinions, programs, listings, software and diagrams are accurate and workable, IDC Technologies do not accept any legal responsibility or liability to any person, organization or other entity for any direct loss, consequential loss or damage, however caused, that may be suffered as a result of the use of this publication or the associated workshop and software.

In case of any uncertainty, we recommend that you contact IDC Technologies for clarification or assistance.

Trademarks

All logos and trademarks belong to, and are copyrighted to, their companies respectively.

Acknowledgements

IDC Technologies expresses its sincere thanks to all those engineers and technicians on our training workshops who freely made available their expertise in preparing this manual.

Preface

One of the great protocols that has been inherited from the Internet is TCP/IP, and it is used today as the open standard for network and communications systems. The reasons for this popularity are not hard to find. TCP/IP and Ethernet are truly open standards available to competing manufacturers and provide the user with a common standard for a variety of products from different vendors. In addition, the cost of TCP/IP and Ethernet is relatively low. Initially TCP/IP was used extensively in military applications and in the purely commercial world such as banking, finance, and general business. Of great interest, however, has been the strong movement towards universal usage by the hitherto uninterested industrial and manufacturing sectors, which have traditionally used their own proprietary protocols and standards. These proprietary standards have been almost entirely replaced by the TCP/IP suite of protocols.

This is a hands-on book that has been structured to cover the main areas of TCP/IP and Ethernet in detail, while covering the practical implementation of TCP/IP in computer and industrial applications. Troubleshooting and maintenance of TCP/IP networks and communications systems in industrial environments will also be covered.

After reading this manual we would hope you would be able to:

- Understand the fundamentals of the TCP/IP suite of protocols

- Gain a practical understanding of the application of TCP/IP

- Learn how to construct a robust Local Area Network (LAN)

- Learn the basic skills in troubleshooting TCP/IP and LANs

- Apply the TCP/IP suite of protocols to both an office and industrial environment

Typical people who will find this book useful include:

- Network technicians

- Data communications managers

- Communication specialists

- IT support managers and personnel

- Network planners

- Programmers

- Design engineers

- Electrical engineers

- Instrumentation and control engineers

- System integrators

- System analysts

- Designers

- IT and MIS managers

- Network support staff

- Systems engineers

You should have a modicum of computer knowledge and know how to use the Microsoft Windows operating system in order to derive maximum benefit from this book.

The structure of the book is as follows.

Chapter 1: Introduction to Communications. This chapter gives a brief overview of what is covered in the book with an outline of the essentials of communications systems.

Chapter 2: Networking Fundamentals. An overview of network communication, types of networks, the OSI model, network topologies and media access methods.

Chapter 3: Half-duplex (CSMA/CD) Ethernet Networks. A description of the operation and performance of the older 10 Mbps Ethernet networks commencing with the basic principles.

Chapter 4: Fast and Gigabit Ethernet Systems. A minimum speed of 100 Mbps is becoming de rigueur on most Ethernet networks and this chapter examines the design and installation issues for Fast Ethernet and Gigabit Ethernet systems, which go well beyond the traditional 10 Mbps speed of operation.

Chapter 5: Introduction to TCP/IP. A brief review of the origins of TCP/IP to lay the foundation for the following chapters.

Chapter 6: Internet layer protocols. This chapter fleshes out the Internet Protocol (both IPv4 and IPv6) – perhaps the workhorses of the TCP/IP suite of protocols – and also examines the operation of ARP, RARP and ICMP.

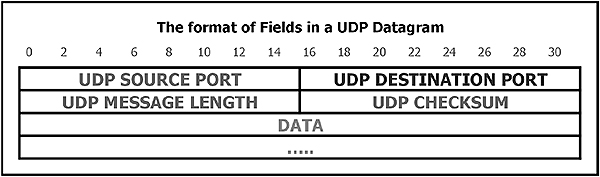

Chapter 7: Host-to-Host (Transport) layer protocols. The TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are both covered in this chapter.

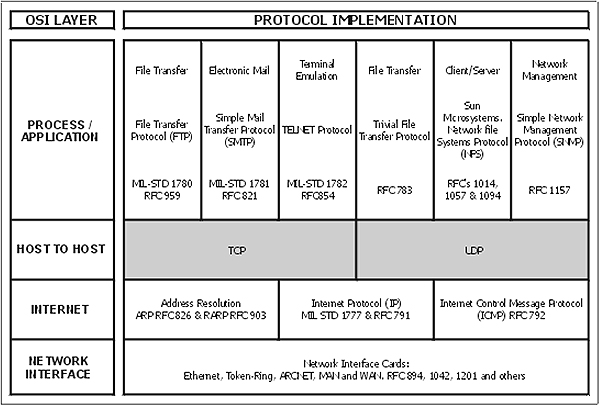

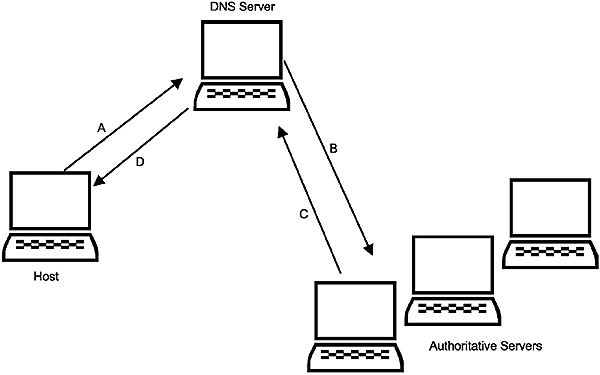

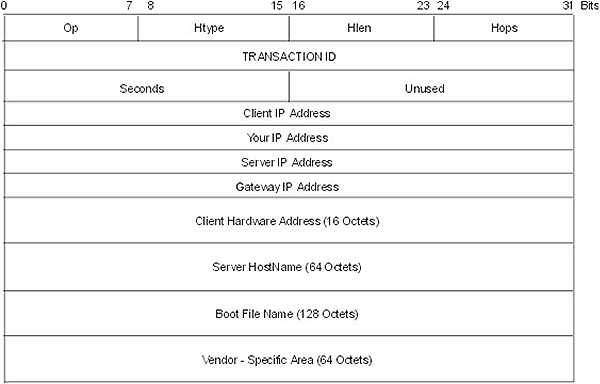

Chapter 8: Application layer protocols. A thorough coverage of the most important Application layer protocols such as FTP, TFTP, TELNET, DNS, WINS, SNMP, SMTP, POP, BOOTP and DHCP.

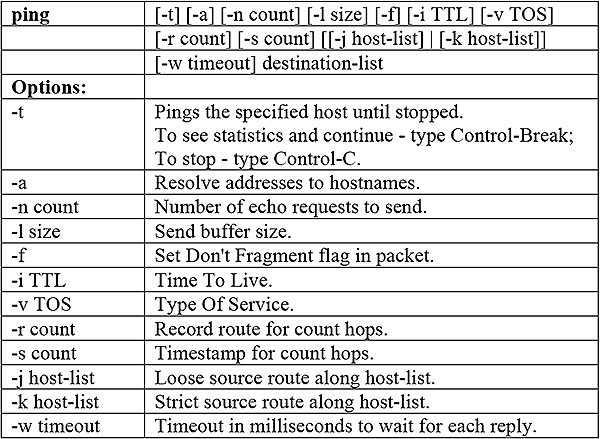

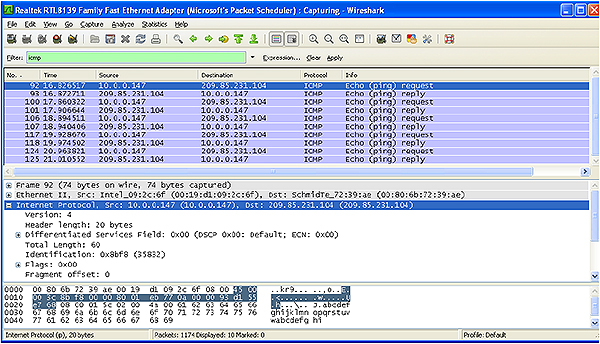

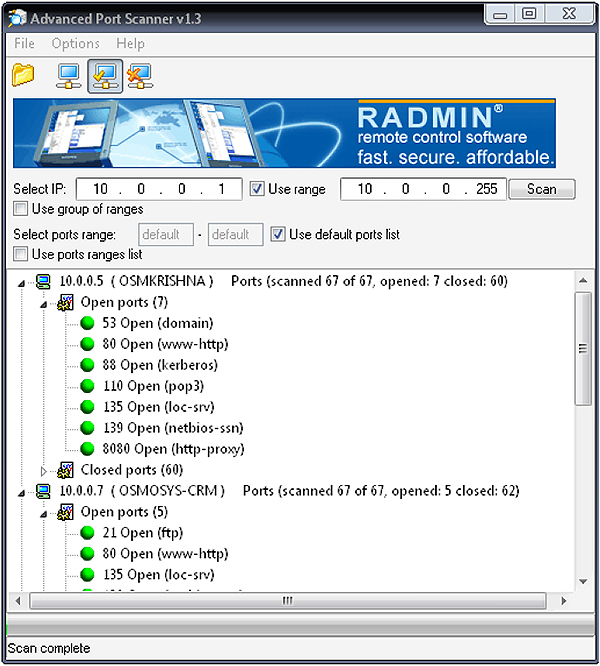

Chapter 9: TCP/IP utilities. A coverage focusing on the practical application of the main utilities such as PING, ARP, NETSTAT, NBTSTAT, IPCONFIG, WINIPCFG, TRACERT, ROUTE and the HOSTS file.

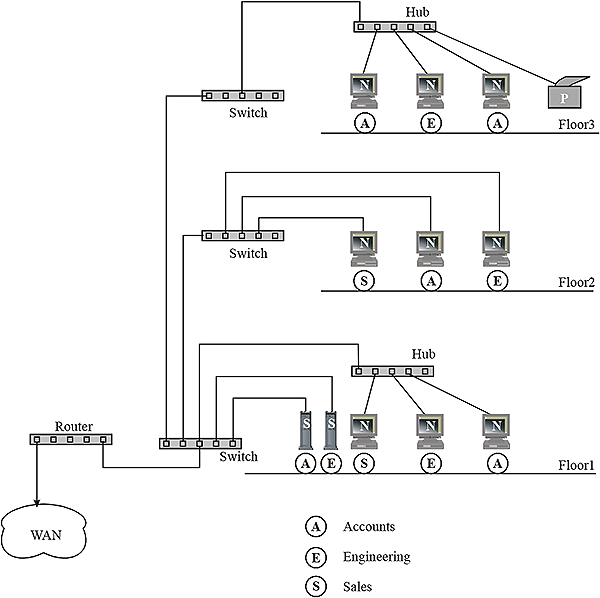

Chapter 10: LAN system components. A discussion on the key components for interconnecting networks such as repeaters, bridges, switches and routers.

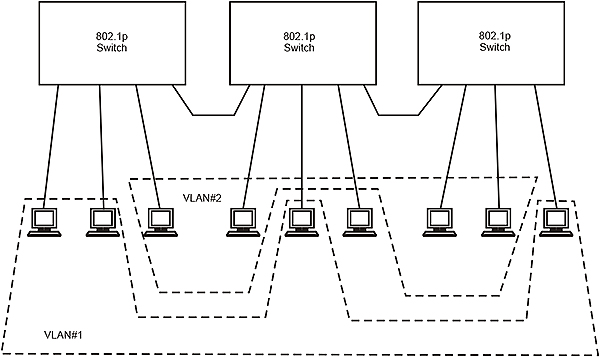

Chapter 11: VLANs. An overview of Virtual LANs: what they are used for, how they are set up, and how they operate.

Chapter 12: VPNs. This chapter discusses the rationale behind VPN deployment and takes a look at the various protocols and security mechanisms employed.

Chapter 13: The Internet for communication. The various TCP/IP protocols used for VoIP (Voice over IP) are discussed here, as well as the H.323 protocols and terminology.

Chapter 14: Security considerations. The security problem and methods of controlling access to a network will be examined in this chapter. This is a growing area of importance due to the proliferation of attacks on computer networks by external parties.

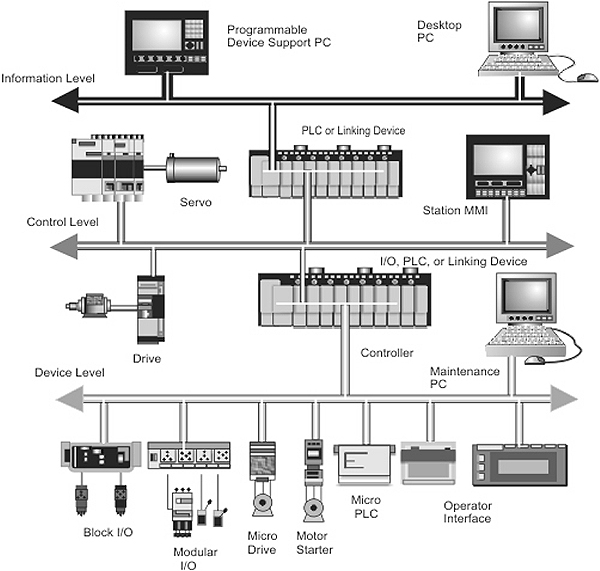

Chapter 15: Process automation. The legacy architectures and the factory of the future will be examined here together with an outline of the key elements of the modern Ethernet and TCP/IP architecture.

Chapter 16: Troubleshooting Ethernet. Various troubleshooting techniques as well as the required equipment used will be described here, focusing on the medium as well as layers 1 and 2 of the OSI model.

Chapter 17: Troubleshooting TCP/IP. This chapter covers the troubleshooting and maintenance of a TCP/IP network, focusing on layers 3 and 4 of the OSI model and dealing with the use of protocol analyzers.

Chapter 18: Satellites and TCP/IP. An overview of satellites and the problems/solutions associated with running TCP/IP over a satellite link.

Learning objectives

When you have completed study of this chapter you should:

- Understand the main elements of the data communication process

- Understand the difference between analog and digital transmission

- Explain how data transfer is affected by attenuation, bandwidth and noise in the channel

- Comprehend the importance of synchronization of digital data systems

- Describe the basic synchronization concepts used with asynchronous and synchronous systems

- Explain the following types of encoding:

  - Manchester

  - RZ

  - NRZ

  - MLT-3

  - 4B/5B

- Describe the basic error detection principles.

1.1 Data communications

Communications systems transfer messages from one location to another. The information component of a message is usually known as data (derived from the Latin word for items of information). All data is made up of unique code symbols or other entities on which the sender and receiver of the messages have agreed. For example, binary data is represented by two states, viz. '0' and '1'. These are referred to as binary digits or 'bits' and are represented inside computers by the level of the electrical signals within storage elements; a high level could represent a '1', and a low level could represent a '0'. Alternatively, the data may be represented by the presence or absence of light in an optical fiber cable.

1.2 Transmitters, receivers and communication channels

A communications process requires the following components:

- A source of the information

- A transmitter to convert the information into data signals compatible with the communications channel

- A communications channel

- A receiver to convert the data signals back into a form the destination can understand

- The destination of the information

This process is shown in Figure 1.1.

Figure 1.1 Communications process

The transmitter encodes the information into a suitable form to be transmitted over the communications channel. The communications channel moves this signal from the source to one or more destination receivers. The channel may convert this energy from one form to another, such as electrical to optical signals, whilst maintaining the integrity of the information so the recipient can understand the message sent by the transmitter.

For the communications to be successful the source and destination must use a mutually agreed method of conveying the data.

The main factors to be considered are:

- The form of signaling and the magnitude(s) of the signals to be used

- The type of communications link (twisted pair, coaxial, optic fiber, radio etc)

- The arrangement of signals to form character codes from which the message can be constructed

- The methods of controlling the flow of data

- The procedures for detecting and correcting errors in the transmission

The form of the physical connections is defined by interface standards. Some agreed-upon coding is applied to the message, and the rules controlling the data flow and the detection and correction of errors are known as the protocol.

1.2.1 Interface standards

An interface standard defines the electrical and mechanical aspects of the interface to allow the communications equipment from different manufacturers to interoperate.

A typical example is the TIA-232-F interface standard (commonly known as RS-232). This specifies the following three components:

- Electrical signal characteristics – defining the allowable voltage levels, grounding characteristics etc

- Mechanical characteristics – defining the connector arrangements and pin assignments

- Functional description of the interchange circuits – defining the function of the various data, timing and control signals used at the interface

It should be emphasized that the interface standard only defines the electrical and mechanical aspects of the interface between devices and does not define how data is transferred between them.

1.2.2 Coding

A wide variety of codes have been used for communications purposes. Early telegraph communications used Morse code with human operators as transmitter and receiver. The Baudot code introduced a constant 5-bit code length for use with mechanical telegraph transmitters and receivers. The commonly used codes for data communications today are the Extended Binary Coded Decimal Interchange Code (EBCDIC) and the American Standard Code for Information Interchange (ASCII).

1.2.3 Protocols

A protocol is essential for defining the common message format and procedures for transferring data between all devices on the network. It includes the following important features:

- Initialization: Initializes the protocol parameters and commences the data transmission

- Framing and synchronization: Defines the start and end of the frame and how the receiver can synchronize to the data stream

- Flow control: Ensures that the receiver is able to advise the transmitter to regulate the data flow and ensure no data is lost.

- Line control: Used with half-duplex links to reverse the roles of transmitter and receiver and begin transmission in the other direction.

- Error control: Provides techniques to check the accuracy of the received data to identify transmission errors. These include block redundancy checks and cyclic redundancy checks

- Time out control: Procedures for transmitters to retry or abort transmission when acknowledgments are not received within agreed time limits

1.2.4 Some commonly used communications protocols

- XMODEM, YMODEM, ZMODEM and Kermit for asynchronous file transmission

- Binary Synchronous Communication (BSC), Synchronous Data Link Control (SDLC) or High-Level Data Link Control (HDLC) for synchronous transmissions

- Industrial protocols such as Modbus and DNP3

1.3 Types of communication channels

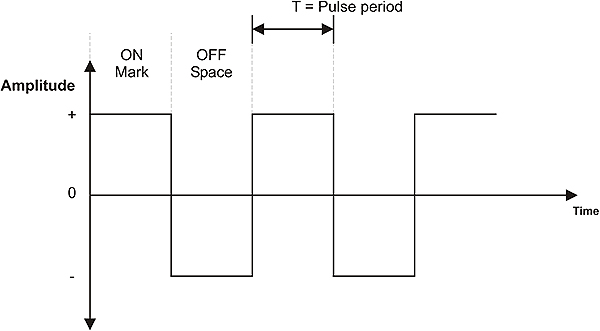

An analog communications channel conveys signals that change continuously in both frequency and amplitude. A typical example is a sine wave as illustrated in Figure 1.2. On the other hand, digital transmission employs a signal of which the amplitude varies between a few discrete states. An example is shown in Figure 1.3.

Figure 1.2 Analog signal

Figure 1.3 Digital signal

1.4 Communications channel properties

1.4.1 Signal attenuation

As the signal travels along a communications channel its amplitude decreases as the physical medium resists the flow of the signal energy. This effect is known as signal attenuation. With electrical signaling some materials such as copper are very efficient conductors of electrical energy. However, all conductors contain impurities that resist the movement of the electrons that constitute the electric current. The resistance of the conductors causes some of the electrical energy of the signal to be converted to heat as the signal progresses along the cable resulting in a continuous decrease in the electrical signal. The signal attenuation is measured in terms of signal loss per unit length of the cable, typically dB/km.

To allow for attenuation, a limit is set for the maximum length of the communications channel. This is to ensure that the attenuated signal arriving at the receiver is of sufficient amplitude to be reliably detected and correctly interpreted. If the channel is longer than this maximum specified length, repeaters must be used at intervals along the channel to restore the signal to acceptable levels.
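The length limit described above can be worked through as a simple loss budget. The following is a minimal sketch only: the function names, the use of dBm for power levels and the figures in the usage note are illustrative assumptions, not values taken from any particular cable or interface standard.

```python
def received_power_dbm(tx_power_dbm, attenuation_db_per_km, length_km):
    """Signal level at the far end of a cable run (all levels in dBm)."""
    return tx_power_dbm - attenuation_db_per_km * length_km


def max_segment_length_km(tx_power_dbm, rx_sensitivity_dbm, attenuation_db_per_km):
    """Longest run for which the signal still reaches the receiver's
    minimum detectable level. Beyond this length a repeater is needed."""
    loss_budget_db = tx_power_dbm - rx_sensitivity_dbm
    return loss_budget_db / attenuation_db_per_km


if __name__ == "__main__":
    # Hypothetical link: 0 dBm transmitter, -30 dBm receiver sensitivity,
    # cable attenuation of 10 dB/km.
    print(max_segment_length_km(0.0, -30.0, 10.0), "km")
    print(received_power_dbm(0.0, 10.0, 2.0), "dBm after 2 km")
```

With these assumed figures the loss budget is 30 dB, so the longest unrepeated run works out to 3 km.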

Figure 1.4 Signal repeaters

Signal attenuation increases as the frequency increases. This causes distortion to practical signals containing a range of frequencies. This problem can be overcome by the use of amplifiers that amplify the higher frequencies by greater amounts.

1.4.2 Channel bandwidth

The quantity of information a channel can convey over a given period is determined by its ability to handle the rate of change of the signal, i.e. the signal frequency. The bandwidth of an analog channel is the difference between the highest and lowest frequencies that can be reliably transmitted over the channel. These frequencies are often defined as those at which the signal at the receiving end has fallen to half the power relative to the mid-band frequencies (referred to as the -3 dB points), in which case the bandwidth is known as the -3 dB bandwidth.

Figure 1.5 Channel bandwidth

Digital signals are made up of a large number of frequency components, but only those within the bandwidth of the channel will be able to be received. It follows that the larger the bandwidth of the channel, the higher the data transfer rate can be and more high frequency components of the digital signal can be transported, and so a more accurate reproduction of the transmitted signal can be received.

Figure 1.6 Effect of channel bandwidth on digital signal

The maximum data transfer rate (C) of a noiseless transmission channel can be determined from its bandwidth, by use of the following formula, generally attributed to Nyquist.

$$C = 2B \log_2 M \ \text{bps}$$

Where:

B = bandwidth in hertz and M = the number of levels used for each signaling element.

In the special case where only two levels, 'ON' and 'OFF', are used (binary), M = 2 and C = 2B. For example, the maximum data transfer rate for a PSTN channel with 3200 hertz bandwidth carrying a binary signal would be 2 × 3200 = 6400 bps. The achievable data transfer rate would then be reduced by half because of the Nyquist rate. It is further reduced in practical situations because of the presence of noise on the channel to approximately 2400 bps unless some modulation system is used.
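The formula above can be checked numerically. This is a small sketch, with a function name chosen here purely for illustration; it reproduces the 3200 Hz binary example from the text and shows the effect of using four signaling levels instead of two.

```python
import math


def max_rate_bps(bandwidth_hz, levels):
    """Maximum data rate C = 2 * B * log2(M) for a noiseless channel."""
    return 2 * bandwidth_hz * math.log2(levels)


if __name__ == "__main__":
    print(max_rate_bps(3200, 2))  # binary signaling on a 3200 Hz channel
    print(max_rate_bps(3200, 4))  # four levels carry two bits per element
```

Doubling the number of bits carried by each signaling element (M = 4 rather than M = 2) doubles the theoretical rate without any change in bandwidth.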

1.4.3 Noise

As the signals pass through a communications channel the atomic particles and molecules in the transmission medium vibrate and emit random electromagnetic signals as noise. The strength of the transmitted signal is normally large relative to the noise signal. However, as the signal travels through the channel and is attenuated, its level can approach that of the noise. When the wanted signal is not significantly higher than the background noise, the receiver cannot separate the data from the noise and communication errors occur.

An important parameter of the channel is the ratio of the power of the received signal (S) to the power of the noise signal (N). The ratio S/N is called the Signal to Noise ratio, normally expressed in decibels (dB).

$$\left(\frac{S}{N}\right)_{\text{dB}} = 10 \log_{10}\left(\frac{S}{N}\right)$$

Where the signal and noise levels S and N are expressed in watts, or

$$\left(\frac{S}{N}\right)_{\text{dB}} = 20 \log_{10}\left(\frac{V_S}{V_N}\right)$$

Where the signal and noise levels V_S and V_N are expressed in volts.

A high signal to noise ratio means that the wanted signal power is high compared to the noise level, resulting in good quality signal reception.
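As a quick worked example of the two decibel expressions, the sketch below converts a power ratio and a voltage ratio to dB. The function names are illustrative, and the voltage form assumes that signal and noise are measured across the same impedance.

```python
import math


def snr_db_from_power(signal_watts, noise_watts):
    """S/N in dB from signal and noise powers."""
    return 10 * math.log10(signal_watts / noise_watts)


def snr_db_from_voltage(signal_volts, noise_volts):
    """S/N in dB from signal and noise voltages (same impedance assumed)."""
    return 20 * math.log10(signal_volts / noise_volts)


if __name__ == "__main__":
    # A 1 W signal against 1 mW of noise is a power ratio of 1000.
    print(round(snr_db_from_power(1.0, 0.001), 2), "dB")
    # A 10:1 voltage ratio is a 100:1 power ratio.
    print(round(snr_db_from_voltage(10.0, 1.0), 2), "dB")
```

The factor of 20 in the voltage form arises because power is proportional to the square of voltage.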

The theoretical maximum data transfer rate for a practical channel can be calculated using the Shannon-Hartley law, which states that:

$$C = B \log_2\left(1 + \frac{S}{N}\right) \ \text{bps}$$

Where:

C = data rate in bps

B = bandwidth of the channel in hertz

S = signal power in watts and

N = noise power in watts

It can be seen from this formula that increasing the bandwidth or increasing the S/N ratio will allow increases to the data rate, and that a relatively small increase in bandwidth is equivalent to a much greater increase in S/N ratio.
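The trade-off between bandwidth and S/N ratio can be seen by evaluating the Shannon-Hartley formula for a few cases. The sketch below is illustrative only: the function name and the 3200 Hz, 30 dB starting point are assumptions chosen to match the scale of the earlier PSTN example.

```python
import math


def shannon_capacity_bps(bandwidth_hz, snr_db):
    """C = B * log2(1 + S/N), with S/N supplied in dB."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB back to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


if __name__ == "__main__":
    base = shannon_capacity_bps(3200, 30)
    wider = shannon_capacity_bps(6400, 30)    # bandwidth doubled
    cleaner = shannon_capacity_bps(3200, 60)  # S/N raised from 30 dB to 60 dB
    print(f"3200 Hz, 30 dB: {base:.0f} bps")
    print(f"6400 Hz, 30 dB: {wider:.0f} bps")
    print(f"3200 Hz, 60 dB: {cleaner:.0f} bps")
```

Doubling the bandwidth doubles the capacity, whereas achieving roughly the same gain through S/N alone requires the ratio to go from 30 dB to about 60 dB, a thousandfold increase in signal power relative to noise.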

Digital transmission channels make use of higher bandwidths and digital repeaters or regenerators to regenerate the signals at regular intervals and maintain acceptable signal to noise ratios. The degraded signals received at the regenerator are detected, then retimed and retransmitted as nearly perfect replicas of the original digital signals, as shown in Figure 1.7. Provided the signal to noise ratio is maintained in each link, there is no accumulated noise on the signal, even when transmitted over thousands of kilometers.

Figure 1.7 Digital link

1.5 Data transmission modes

1.5.1 Direction of signal flow

Simplex

A simplex channel is unidirectional and allows data to flow in one direction only, as shown in Figure 1.8. Public radio broadcasting is an example of a simplex transmission. The radio station transmits the broadcast program, but does not receive any signals back from the receiver(s).

Figure 1.8 Simplex transmission

This has limited use for data transfer purposes, as we invariably require the flow of data in both directions to control the transfer process, acknowledge data etc.

Half-duplex

Half-duplex transmission allows simplex communication in both directions over a single channel, as shown in Figure 1.9. Here the transmitter at 'A' sends data to a receiver at 'B'. A line turnaround procedure takes place whenever transmission is required in the opposite direction. Transmitter 'B' is then enabled and communicates with receiver 'A'. The delay in the line turnaround reduces the available data throughput of the channel.

Figure 1.9 Half-duplex transmission

Full-duplex

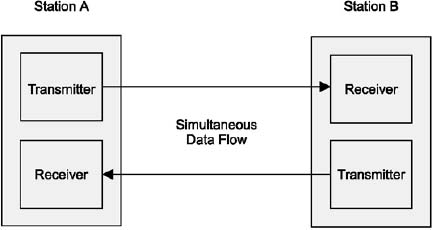

A full-duplex channel gives simultaneous communications in both directions, as shown in Figure 1.10.

Figure 1.10 Full-duplex transmission

1.5.2 Synchronization of digital data signals

Data communications depends on the timing of the signal transmission and reception being kept correct throughout the message transmission. The receiver needs to look at the incoming data at correct instants before determining whether a '1' or '0' was transmitted. The process of selecting and maintaining these sampling times is called synchronization.

In order to synchronize their transmissions, the transmitting and receiving devices need to agree on the length of the code elements to be used, known as the bit time. The receiver also needs to synchronize its clock with that of the sender in order to determine the right times at which to sample the data bits in the message. A device at the receiving end of a digital channel can synchronize itself using either asynchronous or synchronous means as outlined below.

1.5.3 Asynchronous transmission

Here the transmitter and receiver operate independently, and the receiver synchronizes its clock with that of the transmitter (only) at the start of each message frame. Transmissions are typically around one byte in length, but can also be longer. Often (but not necessarily) there is no fixed relationship between one message frame and the next, such as a computer keyboard input with potentially long random pauses between keystrokes.

Figure 1.11 Asynchronous data transmission

At the receiver the channel is monitored for a change in voltage level. The leading edge of the start bit is used to set a bistable multivibrator (flip-flop), and half a bit period later the output of the flip-flop is compared (ANDed) with the incoming signal. If the start bit is still present, i.e. the flip-flop was set by a genuine start bit and not by a random noise pulse, the bit clocking mechanism is set in motion. In fixed-rate systems this half-bit delay can be generated with a monostable (one-shot) multivibrator, but in variable-rate systems it is easier to feed a 4-bit (binary or BCD) counter with a frequency equal to 16 times the data frequency and observe the 'D' (most significant bit) output, which changes from 0 to 1 at a count of eight, half-way through the bit period.

Figure 1.12 Clock synchronization at the receiver

The input signal is fed into a serial-in parallel-out shift register. The data bits are then captured by clocking the shift register at the data rate, in the middle of each bit. For an eight-bit serial transmission (data plus parity), this sampling is repeated for each of the eight data bits and a final sample is made during the ninth time interval to identify the stop bit and to confirm that the synchronization has been maintained to the end of the frame. Figure 1.13 illustrates the asynchronous data reception process.
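The reception process described above can be imitated in software. The sketch below is a simplified model, not a description of any particular UART: it assumes an idle line at logic '1', a start bit of '0', eight data bits sent least significant bit first, one stop bit of '1', and a receiver clock running at 16 times the bit rate. All function and constant names are illustrative.

```python
OVERSAMPLE = 16  # receiver clock ticks per bit period (assumed)


def frame_byte(value):
    """Line samples for: one idle bit, start bit, 8 data bits (LSB first), stop bit."""
    bits = [1, 0] + [(value >> i) & 1 for i in range(8)] + [1]
    return [bit for bit in bits for _ in range(OVERSAMPLE)]


def receive_byte(samples):
    """Return the received byte, or None on a false start or framing error."""
    # Look for the leading (falling) edge of the start bit.
    for i in range(1, len(samples)):
        if samples[i - 1] == 1 and samples[i] == 0:
            break
    else:
        return None
    # Half a bit period later, confirm the start bit is still present.
    mid = i + OVERSAMPLE // 2
    if mid >= len(samples) or samples[mid] != 0:
        return None  # a noise pulse, not a genuine start bit
    # Sample each data bit in the middle of its bit period.
    value = 0
    for bit in range(8):
        pos = mid + (bit + 1) * OVERSAMPLE
        if pos >= len(samples):
            return None
        value |= samples[pos] << bit
    # The ninth interval must contain the stop bit.
    stop_pos = mid + 9 * OVERSAMPLE
    if stop_pos >= len(samples) or samples[stop_pos] != 1:
        return None  # framing error: synchronization was lost
    return value


if __name__ == "__main__":
    print(hex(receive_byte(frame_byte(0x41))))
    noise = [1] * 16 + [0] * 3 + [1] * 200  # short glitch, not a start bit
    print(receive_byte(noise))
```

Sampling in the middle of each bit gives the receiver the largest margin against a clock that runs slightly fast or slow relative to the transmitter.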

Figure 1.13 Asynchronous data reception (clock too slow)

1.5.4 Synchronous transmission

The receiver here is initially synchronized with the transmitter, and then maintains this synchronization throughout the continuous transmission of multiple bytes. This is achieved by special data coding schemes, such as Manchester, which ensure that the transmitted clock is encoded into the transmitted data stream. This enables the synchronization to be maintained at the receiver right to the last bit of the message, and thus allows larger frames of data (up to several thousand bytes) to be efficiently transferred at high data rates.

The synchronous system packs many bytes together and sends them as a continuous stream, called a frame. Each frame consists of a header, data field and checksum. Clock synchronization is provided by a predefined sequence of bits called a preamble.

In some cases the preamble is terminated with a 'Start of Frame Delimiter' or SFD. Some systems may append a post-amble if the receiver is not otherwise able to detect the end of the message. An example of a synchronous frame is shown in Figure 1.14. Understandably all high-speed data transfer systems utilize synchronous transmission systems to achieve fast, accurate transfers of large amounts of data.

Figure 1.14 Synchronous frame

1.6 Encoding methods

1.6.1 Manchester

Manchester is a bi-phase signal-encoding scheme, and is used in the older 10 Mbps Ethernet LANs. The direction of the transition in mid-interval (negative to positive or positive to negative) indicates the value ('1' or '0', respectively) and provides the clocking.

The Manchester codes have the advantage that they are self-clocking. Even a sequence of one thousand '0s' will have a transition in every bit; hence the receiver will not lose synchronization. The price paid for this is a bandwidth requirement double that which is required by the RZ-type methods.

The Manchester scheme follows these rules:

- +V and –V voltage levels are used

- There is a transition from one to the other voltage level halfway through each bit interval

- There may or may not be a transition at the start of each bit interval, depending on whether the bit value is a '0' or '1'

- For a '1' bit, the transition is always from a –V to +V; for a '0' bit, the transition is always from a +V to a –V

In Manchester encoding, the beginning of a bit interval is used merely to set the stage. The activity in the middle of each bit interval determines the bit value: upward transition for a '1' bit, downward for a '0' bit.
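The rules above translate directly into code. The following is a minimal sketch in which each bit interval is represented by two half-interval voltage samples; the function names and the use of +1 and -1 for +V and –V are illustrative choices.

```python
def manchester_encode(bits, v=1):
    """Two half-interval samples per bit: '1' is -V then +V, '0' is +V then -V."""
    signal = []
    for bit in bits:
        signal += [-v, +v] if bit else [+v, -v]
    return signal


def manchester_decode(signal):
    """Recover bits from the direction of each mid-interval transition."""
    bits = []
    for first, second in zip(signal[0::2], signal[1::2]):
        if first == second:
            raise ValueError("missing mid-bit transition")
        bits.append(1 if second > first else 0)
    return bits


if __name__ == "__main__":
    encoded = manchester_encode([1, 0, 0, 1])
    print(encoded)
    print(manchester_decode(encoded))
```

Note that the encoded signal contains twice as many samples as there are data bits, which is the doubled bandwidth requirement mentioned earlier, and that even a long run of identical bits still produces a transition in every bit interval.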

1.6.2 Differential Manchester

Differential Manchester is a bi-phase signal-encoding scheme used in Token Ring LANs. The presence or absence of a transition at the beginning of a bit interval indicates the value; the transition in mid-interval just provides the clocking.

For electrical signals, bit values will generally be represented by one of three possible voltage levels: positive (+V), zero (0 V), or negative (–V). Any two of these levels are needed – for example, + V and –V.

There is a transition in the middle of each bit interval. This makes the encoding method self-clocking, and helps avoid signal distortion due to DC signal components.

For one of the possible bit values but not the other, there will be a transition at the start of any given bit interval. For example, in a particular implementation, there may be a signal transition for a '1' bit.

In differential Manchester encoding, the presence or absence of a transition at the beginning of the bit interval determines the bit value. In effect, '1' bits produce vertical signal patterns; '0' bits produce horizontal patterns. The transition in the middle of the interval is just for timing.
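A short sketch can make the difference from plain Manchester encoding concrete. It follows the example convention mentioned above, in which a '1' bit produces a transition at the start of the bit interval; the `transition_bit` parameter, the `start_level` parameter and the function names are assumptions introduced here so that the opposite convention can also be tried.

```python
def diff_manchester_encode(bits, start_level=1, transition_bit=1):
    """Two half-interval samples per bit, using levels +1 and -1."""
    level = start_level  # line level at the end of the previous bit interval
    signal = []
    for bit in bits:
        if bit == transition_bit:
            level = -level  # transition at the start of the interval
        signal.append(level)
        level = -level      # mid-interval transition, present for every bit
        signal.append(level)
    return signal


def diff_manchester_decode(signal, start_level=1, transition_bit=1):
    """Recover bits from the presence or absence of a start-of-interval transition."""
    bits = []
    previous = start_level
    for first, second in zip(signal[0::2], signal[1::2]):
        changed = first != previous
        bits.append(transition_bit if changed else 1 - transition_bit)
        previous = second
    return bits


if __name__ == "__main__":
    encoded = diff_manchester_encode([1, 0, 1])
    print(encoded)
    print(diff_manchester_decode(encoded))
    # Swapping the two wires inverts every level but not the decoded data.
    inverted = [-s for s in encoded]
    print(diff_manchester_decode(inverted, start_level=-1))
```

Because only the presence or absence of a transition carries information, the decoded data is unaffected by an inversion of signal polarity.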

1.6.3 RZ (return to zero)

The RZ-type codes consume only half the bandwidth taken up by the Manchester codes. However, in their unipolar form (where a '0' is sent as no pulse at all) they are not self-clocking, since a sequence of a thousand '0s' will result in no movement on the transmission medium at all.

Bipolar RZ is a signal-encoding scheme that uses transition coding to return the signal to a zero voltage during part of each bit interval. Because every bit then produces a pulse of one polarity or the other, it is self-clocking.

In the differential version, the defining voltage (the voltage associated with the first half of the bit interval) changes for each '1' bit, and remains unchanged for each '0' bit.

In the non-differential version, the defining voltage changes only when the bit value changes, so that the same defining voltages are always associated with '0' and '1'. For example, +5 volts may define a '1', and –5 volts may define a '0'.

1.6.4 NRZ (non-return to zero)

NRZ is a bipolar encoding scheme. In the non-differential version it associates, for example, +5 V with '1' and –5 V with '0'.

In the differential version, it changes voltages between bit intervals for '1' values but not for '0' values. This means that the encoding changes during a transmission. For example, '0' may be a positive voltage during one part and a negative voltage during another part, depending on the last occurrence of a '1'. The presence or absence of a transition indicates a bit value, not the voltage level.
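The two NRZ variants can be compared side by side. This is a minimal sketch with one sample per bit interval; the function names, the ±5 V levels and the assumed starting level for the differential version are illustrative.

```python
def nrz_encode(bits, v=5):
    """Non-differential NRZ: +V for '1', -V for '0'."""
    return [v if bit else -v for bit in bits]


def nrz_differential_encode(bits, v=5, start_level=None):
    """Differential NRZ: change level for a '1', hold the level for a '0'."""
    level = -v if start_level is None else start_level
    signal = []
    for bit in bits:
        if bit:
            level = -level
        signal.append(level)
    return signal


def nrz_differential_decode(signal, v=5, start_level=None):
    """A transition between intervals means '1', no transition means '0'."""
    previous = -v if start_level is None else start_level
    bits = []
    for level in signal:
        bits.append(1 if level != previous else 0)
        previous = level
    return bits


if __name__ == "__main__":
    data = [1, 0, 0, 1, 0]
    print(nrz_encode(data))
    print(nrz_differential_encode(data))
```

In the differential output the '0' bits appear at +5 V after the first '1' and at –5 V after the second, which is the behavior described above: the voltage of a '0' depends on the last occurrence of a '1'.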

1.6.5 MLT-3

MLT-3 is a three-level encoding scheme that can also scramble data. This scheme is one proposed for use in FDDI networks. The MLT-3 signal-encoding scheme uses three voltage levels (including a zero level) and changes levels only when a '1' occurs.

It follows these rules:

- +V, 0 V, and –V voltage levels are used

- The voltage remains the same during an entire bit interval; that is, there are no transitions in the middle of a bit interval

- The voltage level changes in succession; from +V to 0 V to –V to 0 V to +V, and so on

- The voltage level changes only for a '1' bit

MLT-3 is not self-clocking, so that a synchronization sequence is needed to make sure the sender and receiver are using the same timing.
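The MLT-3 rules listed above can be sketched as a small state machine that steps through the level sequence each time a '1' is sent. The function name and the assumption that the line starts at 0 V (about to step to +V) are illustrative.

```python
def mlt3_encode(bits, v=1):
    """One sample per bit interval; the level advances only for a '1' bit."""
    cycle = [+v, 0, -v, 0]  # +V -> 0 V -> -V -> 0 V -> +V ...
    index = 3               # assumed start: 0 V, next step goes to +V
    signal = []
    for bit in bits:
        if bit:
            index = (index + 1) % 4
        signal.append(cycle[index])
    return signal


if __name__ == "__main__":
    print(mlt3_encode([1, 1, 1, 1, 0, 1]))
    print(mlt3_encode([0, 0, 0, 0]))  # no transitions at all
```

The second call shows why the scheme is not self-clocking: a run of '0' bits leaves the line at a constant level, so the receiver has no transitions from which to recover timing.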

1.6.6 4B/5B

The Manchester codes, as used for 10 Mbps Ethernet, are self-clocking but consume unnecessary bandwidth (at 10 Mbps they introduce a 20 MHz frequency component on the medium). For this reason it is not possible to use them for 100 Mbps Ethernet, even over Cat5 cable. A solution is to revert to one of the more bandwidth-efficient methods such as NRZ or RZ. The problem with these, however, is that they are not self-clocking and hence the receiver loses synchronization if several zeros are transmitted sequentially. This problem, in turn, is overcome by using the 4B/5B technique.

The 4B/5B technique codes each group of four bits into a five-bit code. For example, the binary pattern 0110 is coded into the five-bit pattern 01110. This code table has been designed in such a way that no combination of data can ever be encoded with more than three zeros in a row. This allows the carriage of 100 Mbps data by transmitting at 125 Mbaud, as opposed to the 200 Mbaud required by Manchester encoding.

Table 1.1 4B/5B data coding
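The sketch below illustrates the 4B/5B technique using the data-symbol mapping commonly published for FDDI and 100BASE-X; it is assumed, not confirmed, that this matches Table 1.1. The function name is illustrative, and the input is taken as a string of bits so that no assumption about nibble ordering within a byte is needed.

```python
# Commonly published 4B/5B data symbols (assumed to correspond to Table 1.1).
CODE_4B5B = {
    "0000": "11110", "0001": "01001", "0010": "10100", "0011": "10101",
    "0100": "01010", "0101": "01011", "0110": "01110", "0111": "01111",
    "1000": "10010", "1001": "10011", "1010": "10110", "1011": "10111",
    "1100": "11010", "1101": "11011", "1110": "11100", "1111": "11101",
}


def encode_4b5b(bitstring):
    """Replace each group of four data bits with its five-bit code group."""
    if len(bitstring) % 4:
        raise ValueError("input length must be a multiple of four bits")
    groups = [bitstring[i:i + 4] for i in range(0, len(bitstring), 4)]
    return "".join(CODE_4B5B[group] for group in groups)


if __name__ == "__main__":
    print(encode_4b5b("0110"))
    print(encode_4b5b("0000" * 4))  # all-zero data still contains plenty of '1's
    # Check every pair of adjacent code groups for runs of four or more zeros.
    worst = any("0000" in a + b
                for a in CODE_4B5B.values() for b in CODE_4B5B.values())
    print("run of four zeros possible:", worst)
```

Every four data bits become five line bits, which is where the 25% overhead (125 versus 100) comes from.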

1.7 Error detection

All practical data communications channels are subject to noise, particularly where equipment is situated in industrial environments with high electrical noise, such as electromagnetic radiation from adjacent equipment or electromagnetic induction from adjacent cables. As a consequence the received data may contain errors. To ensure reliable data communication we need to check the accuracy of each message.

Asynchronous systems often use a single-bit checksum, the parity bit, for each message, calculated from the seven or eight data bits in the message. Longer messages require more complex checksum calculations to be effective. For example, the Longitudinal Redundancy Check (LRC) calculates an additional byte covering the content of the message (up to 15 bytes) while a two-byte arithmetic checksum can be used for messages up to 50 bytes in length. Most high-speed LANs use a 32-bit Cyclic Redundancy Check (CRC).
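The simpler checks mentioned above can be sketched in a few lines. The function names are illustrative, even parity is assumed, and the LRC is computed here as the exclusive-OR of all message bytes, which is one common definition; some protocols define their LRC differently (for example as a negated arithmetic sum).

```python
def even_parity_bit(value):
    """Parity bit that makes the total number of '1' bits even."""
    return bin(value).count("1") % 2


def lrc_xor(data):
    """Longitudinal redundancy check taken as the XOR of all bytes (assumed variant)."""
    result = 0
    for byte in data:
        result ^= byte
    return result


if __name__ == "__main__":
    print(even_parity_bit(0b1011001))  # four '1' bits already: parity bit is 0
    print(even_parity_bit(0b1011000))  # three '1' bits: parity bit is 1
    message = b"\x01\x02\x04"
    check = lrc_xor(message)
    # The receiver recomputes the LRC over message plus check byte and expects zero.
    print(check, lrc_xor(message + bytes([check])))
```

A single parity bit detects any odd number of bit errors but misses an even number, which is why longer messages need the stronger checks described next.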

The CRC method detects errors with a very high degree of accuracy in messages of any length and can, for example, detect the presence of a single bit error in a frame containing tens of thousands of bits of data. The CRC treats all the bits of the message block as one binary number that is then divided by a known polynomial. For a 32-bit CRC this is a specific 32-bit number, specially chosen to detect very high percentages of errors, including all error sequences of less than 32 bits. The remainder found after this division process is the CRC. Calculation of the CRC is carried out by the hardware in the transmission interface of LAN adapter cards.
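Although LAN adapters compute the CRC in hardware, the division process can be sketched in software. The example below is a bit-by-bit version of the widely used CRC-32 (the variant implemented by Python's `zlib.crc32`); note that this variant adds refinements beyond plain division, namely bit-reversed processing and inversion of the initial and final values. The function name is illustrative.

```python
import zlib

CRC32_POLY_REFLECTED = 0xEDB88320  # bit-reversed form of the CRC-32 generator


def crc32_bitwise(data):
    """Bit-at-a-time CRC-32: shift the message through, XOR-ing in the
    polynomial whenever a '1' falls out of the register."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ CRC32_POLY_REFLECTED
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF


if __name__ == "__main__":
    frame = bytes(1500)  # a frame-sized block of zero bytes
    good = crc32_bitwise(frame)
    print(hex(good), good == zlib.crc32(frame))
    # Flip a single bit somewhere in the middle of the frame.
    corrupted = bytearray(frame)
    corrupted[700] ^= 0x10
    print("single-bit error detected:", crc32_bitwise(bytes(corrupted)) != good)
```

The receiver performs the same calculation over the received data and compares the result with the CRC carried in the frame; any mismatch marks the frame as corrupted.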

Learning objectives

When you have completed study of this chapter you should be able to:

- Explain the difference between circuit switching and packet switching

- Explain the difference between connectionless and connection oriented communication

- Explain the difference between a datagram service and a virtual circuit

- List the differences between LANs, MANs and WANs

- Describe the concept of a layered communications model

- Describe the functions of each layer in the OSI reference model

- Indicate the structure and relevance of the IEEE 802 Standards and Working Groups

- Identify hub, ring and bus topologies – from a physical as well as from a logical point of view

- Describe the basic mechanisms involved in contention, token passing and polling media access control methods

2.1 Overview

Linking computers and other devices together to share information is nothing new. The technology for Local Area Networks (LANs) was developed in the 1970s by minicomputer manufacturers to link widely separated user terminals to computers. This allowed the sharing of expensive peripheral equipment as well as data that may have previously existed in only one physical location.

A LAN is a communications path between two or more computers, file servers, terminals, workstations and various other intelligent peripheral equipment, generally referred to as devices or hosts. A LAN allows access to devices to be shared by several users, with full connectivity between all stations on the network. It is usually privately owned and administered, and is located within a localized group of buildings.

The connection of a device such as a PC or printer to a LAN is generally made through a Network Interface Card or NIC. The networked device is then referred to as a node. Each node is allocated a unique address and every message sent on the LAN to a specific node must contain the address of that node in its header. All nodes continuously watch for any messages sent to their own addresses on the network. LANs operate at relatively high speeds (Mbps range and upwards) with a shared transmission medium over a fairly small geographical (i.e. local) area.

On a LAN the software controlling the transfer of messages among the devices on the network must deal with the problem of sharing the common resources of the network without conflict or corruption of data. Since many users can access the network at the same time, some rules must be established by which devices can access the network, when, and under what conditions. These rules are covered under the general heading of Media Access Control.

When a node has access to the channel to transmit data, it sends the data within a packet (or frame) that generally includes, in its header, the addresses of both the source and the destination nodes. This allows each node to either receive or ignore data on the network.
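The address filtering described above can be illustrated with a small sketch. The `Frame` and `Node` classes and the `send_on_medium` function are hypothetical names chosen for this example; a real NIC performs this comparison in hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    destination: str   # address of the node the frame is intended for
    source: str        # address of the sending node
    payload: bytes


class Node:
    def __init__(self, address: str):
        self.address = address
        self.received = []

    def on_frame(self, frame: Frame) -> bool:
        """Accept the frame only if it is addressed to this node."""
        if frame.destination != self.address:
            return False               # not for us: ignore it
        self.received.append(frame)
        return True


def send_on_medium(frame: Frame, nodes) -> list:
    """Every node on the shared medium sees the frame; return who accepted it."""
    return [node.address for node in nodes if node.on_frame(frame)]


if __name__ == "__main__":
    lan = [Node("A"), Node("B"), Node("C")]
    print(send_on_medium(Frame("B", "A", b"hello"), lan))
```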

2.2 Network communication

There are two basic types of communications processes for transferring data across networks, viz. circuit switching and packet switching. These are illustrated in Figure 2.1.

Circuit switched and packet switched data

2.2.1 Circuit switching

In a circuit switched process a continuous connection is made across the network between the two different points. This is a temporary connection that remains in place as long as both parties wish to communicate, i.e. until the connection is terminated. All the network resources are available for the exclusive use of these two parties whether they are sending data or not. When the connection is terminated the network resources are released for other users. A call in an older (non-digital) telephone system is an example of a circuit switched connection.

The advantage of circuit switching is that the users have an exclusive channel available for the transfer of their data at any time while the connection is made. The obvious disadvantage is the cost of maintaining the connection when there is little or no data being transferred. Such connections can be very inefficient for the bursts of data that are typical of many computer applications.

2.2.2 Packet switching

Packet switching systems improve the efficiency of the transfer of bursts of data, by sharing the one communications channel with other similar users. This is analogous to the efficiencies of the mail system as discussed in the following paragraph.

When you send a letter by mail you post the stamped, addressed envelope containing the letter in your local mailbox. At regular intervals the mail company collects all the letters from your locality and takes them to a central sorting facility where the letters are sorted in accordance with the addresses of their destinations. All the letters for each destination are sent off in common mailbags to those locations, and are subsequently delivered in accordance with their addresses. Here we have economies of scale where many letters are carried at one time and are delivered by the one visit to your street/locality. Efficiency is more important than speed, and some delay is normal – within acceptable limits.

Packet switched messages are broken into a series of packets of certain maximum size, each containing the destination and source addresses and a packet sequence number. The packets are sent over a common communications channel, interleaved with those of other users. The 'switches' (routers) in the system forward the messages based on their destination address. Messages sent in multiple packets are reassembled in the correct order by the destination node.

Not all packets necessarily follow the same path. As they travel through the network they may get separated and handled independently from each other, but they eventually arrive at their correct destination, possibly out of their transmitted sequence. Some packets may even be held up temporarily (stored) at a node, due to unavailable lines or technical problems that might arise on the network. When conditions allow, the node then allows the packet to pass or be 'forwarded'.
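The process of breaking a message into numbered packets and reassembling it at the destination can be sketched as follows. The `Packet` structure and the function names are hypothetical, and shuffling the list merely stands in for packets taking different routes through the network.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Packet:
    source: str
    destination: str
    sequence: int      # position of this packet within the message
    payload: bytes


def packetize(message: bytes, source: str, destination: str,
              max_payload: int) -> List[Packet]:
    """Split a message into packets of at most max_payload bytes each."""
    return [
        Packet(source, destination, seq, message[start:start + max_payload])
        for seq, start in enumerate(range(0, len(message), max_payload))
    ]


def reassemble(packets: List[Packet]) -> bytes:
    """Restore the original message, whatever order the packets arrived in."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    return b"".join(p.payload for p in ordered)


if __name__ == "__main__":
    message = b"Packets may arrive out of their transmitted sequence."
    packets = packetize(message, "A", "B", max_payload=8)
    random.shuffle(packets)            # simulate out-of-order arrival
    print(reassemble(packets) == message)
```

The sequence number is what allows the destination node to restore the order; without it, out-of-order arrival would corrupt the message.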

The Internet is an example of a global packet switching network.

2.2.3 Datagrams and virtual circuits

Packet switched services generally support two types of services viz. datagram services and virtual circuits. In a self-contained LAN all packets will eventually reach their destination. However, if packets are to be switched ACROSS networks i.e. on an internetwork such as a Wide Area Network (WAN), then a routing decision must be made.

There are two possible approaches. The first is referred to as a 'datagram' service. The destination address incorporated in the data header will allow the routing to be performed. There is no guarantee when any packet will arrive at its destination, and sequential packets may well arrive out of sequence. The principle is similar to the mail service. You may send four postcards from your holiday in the South of France, but there is no guarantee that they will arrive in the same order that you posted them. If the recipient does not have a telephone, there is no easy method of determining that they have, in fact, been delivered.

Such a service is called an 'unreliable' service. The term 'unreliable' is here not used in its everyday context, but instead refers to the fact that there is no mechanism for informing the sender whether the packet had been delivered or not. The service is also called 'connectionless' since there is no logical connection between sender and recipient.

The second approach is to set up a logical connection between transmitter and receiver, and to send packets of data along this connection or 'virtual circuit'. Whilst this might seem to be in conflict with the earlier statements on circuit switching, it should be quite clear that this does NOT imply a permanent physical circuit being dedicated to the one packet stream of data. Instead, the circuit shares its capacity with other traffic. The important point to note is that the route for the data packets is determined up-front, when the connection is set up and all the routing decisions are taken. The data packets then simply follow that pre-established route. This service is known as 'reliable' and is also referred to as a connection oriented service.

2.3 Types of networks

2.3.1 LANs

LANs are characterized by high-speed transmission over a restricted geographical area. Gigabit Ethernet (1000BaseX), for example, operates at 1000 Mbps. The restriction on size, though, is a deployment and cost issue and not a technical one, as current Ethernet technology allows switches to be interconnected with fiber into networks covering distances of thousands of kilometers.

Example of a LAN

2.3.2 WANs

While LANs operate where distances are relatively small, Wide Area Networks (WANs) are used to link LANs that are separated by distances that range from a few tens of meters to thousands of kilometers. WANs normally use the public telecommunication system to provide cost-effective connection between LANs. Since these links are supplied by independent telecommunications utilities, they are commonly referred to (and illustrated as) a 'communications cloud'. Routers connect each LAN to this cloud. They store the message at LAN speed and re-transmit it across the communications cloud at a different speed. When the entire message has been received at the access router of the remote LAN, it is once again forwarded at LAN speed. Typical WAN link speeds range from 9600 bps for a leased line to 40 Gbps for SDH/SONET. This concept is shown in Figure 2.3.

Example of a WAN

If reliability is needed for a time critical application, WANs can be considered quite unreliable, as delay in the information transmission is varied and wide. For this reason, WANs can only be used if the necessary error detection/correction software is in place, and if propagation delays can be tolerated within certain limits.

2.3.3 MANs

An intermediate type of network – a Metropolitan Area Network (MAN) – operates typically at 100 Mbps. MANs use fiber optic technology to communicate over distances of up to several hundred kilometers. They are normally used by telecommunication service providers or utilities within or around cities. The distinction between MANs and LANs is, however, becoming rather academic since current Ethernet LAN technology (100 Mbps switches with 120 km fiber links) is also used to implement MANs.

2.3.4 Coupling ratio

The coupling ratio provides an academic yardstick for comparing the performance of these different kinds of networks. It is useful to give us an insight into the way these networks operate.

Coupling ratio α = τ/T

Where:

τ = propagation delay for packet

T = average packet transmission time

α << 1 indicates a LAN

α ≈ 1 indicates a MAN

α >> 1 indicates a WAN

This is illustrated in the following examples and Figure 2.4.

- 200 m LAN: With a propagation delay of about 1 µs, a 1000 byte packet takes about 0.8 ms to transmit at 10 Mbps. Therefore α is about 1 µs/0.8 ms or 1/800, which is very much less than 1. This means that for a LAN the start of the packet quickly reaches the destination and the transmission of the packet then takes, say, hundreds of times longer to complete.

- 200 km MAN: With a propagation delay of about 1 ms, a 4000 byte packet takes about 0.32 ms to transmit at 100 Mbps. Therefore α is about 1 ms/0.32 ms or roughly 3, which is of the same order as 1. This means that for a MAN the propagation delay and the time taken to transmit the packet are of similar magnitude.

- 100 000 km WAN: With a propagation delay of about 0.5–2 seconds, a packet of 128 bytes takes about 1 ms to transmit at 1 Mbps. Therefore α is about 1 s/1 ms or 1000. This means that for a WAN the packet reaches the destination after a delay of about 1000 times the packet transmission time.

Coupling ratios
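The coupling ratio is easy to check numerically. The sketch below recomputes α = τ/T from the packet sizes and bit rates quoted in the three examples. The function names are hypothetical, and the propagation delays are assumptions taken from the examples: 1 microsecond for the LAN (the value implied by the quoted ratio of 1/800), 1 millisecond for the MAN, and 1 second for the WAN as a mid-range figure. Computed values may differ slightly from the rounded figures in the text.

```python
def transmission_time(packet_bytes: int, bit_rate_bps: float) -> float:
    """T: time in seconds to clock the whole packet onto the medium."""
    return packet_bytes * 8 / bit_rate_bps


def coupling_ratio(propagation_delay_s: float, packet_bytes: int,
                   bit_rate_bps: float) -> float:
    """alpha = tau / T."""
    return propagation_delay_s / transmission_time(packet_bytes, bit_rate_bps)


# (propagation delay in s, packet size in bytes, bit rate in bps)
EXAMPLES = {
    "200 m LAN": (1e-6, 1000, 10e6),
    "200 km MAN": (1e-3, 4000, 100e6),
    "100 000 km WAN": (1.0, 128, 1e6),
}

if __name__ == "__main__":
    for name, (tau, size, rate) in EXAMPLES.items():
        t = transmission_time(size, rate)
        alpha = coupling_ratio(tau, size, rate)
        print(f"{name}: T = {t * 1e3:.3f} ms, alpha = {alpha:.5g}")
```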

2.3.5 VPNs

A cheaper alternative to a WAN, which uses dedicated packet switched links (such as X.25) to interconnect two or more LANs, is the Virtual Private Network (VPN), which interconnects LANs by utilizing the existing Internet infrastructure.

A potential problem is the fact that the traffic between the networks shares the Internet with all other traffic, and hence all communications between the LANs are potentially visible to the outside world. This problem is solved by utilizing encryption techniques to make all communications between the LANs opaque (i.e. illegible) to other Internet users.

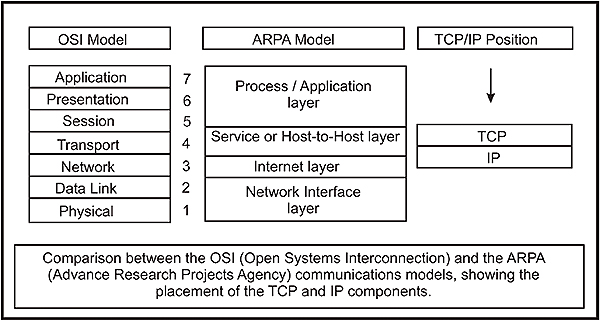

2.4 The OSI model

A communication framework that has had a tremendous impact on the design of LANs is the Open Systems Interconnection (OSI) model. The objective of this model is to provide a framework for the coordination of standards development and to allow both existing and evolving standards activities to be set within that common framework.

2.4.1 Open and closed systems

The wiring together of two or more devices with digital communication is the first step towards establishing a network. In addition to the hardware requirements as discussed above, the software problems of communication must also be overcome. Where all the devices on a network are from the same manufacturer, the hardware and software problems are usually easily overcome because all the system components have usually been designed within the same guidelines and specifications.

When devices from several manufacturers are used on the same application, the problems seem to multiply. Networks that are specific to one manufacturer and work with proprietary hardware connections and protocols are called closed systems. Usually these systems were developed at a time before standardization became popular, or when it was considered unlikely that equipment from other manufacturers would be included in the network.

In contrast, 'open' systems conform to specifications and guidelines that are 'open' to all. This allows equipment from any manufacturer that complies with that standard to be used interchangeably on the network. The benefits of open systems include wider availability of equipment, lower prices and easier integration with other components.

2.4.2 The OSI concept

Faced with the proliferation of closed network systems, the International Organization for Standardization (ISO) defined a 'Reference Model for Communication between Open Systems' (ISO 7498) in 1978. This has since become known as the OSI model. The OSI model is essentially a data communications management structure, which breaks data communications down into a manageable hierarchy ('stack') of seven layers. Each layer has a defined purpose and interfaces with the layers above it and below it. By laying down functions and services for each layer, some flexibility is allowed so that the system designers can develop protocols for each layer independently of each other. By conforming to the OSI standards, a system is able to communicate with any other compliant system, anywhere in the world.

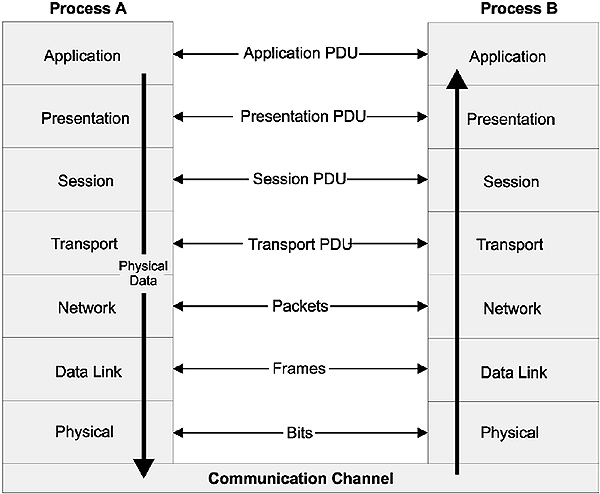

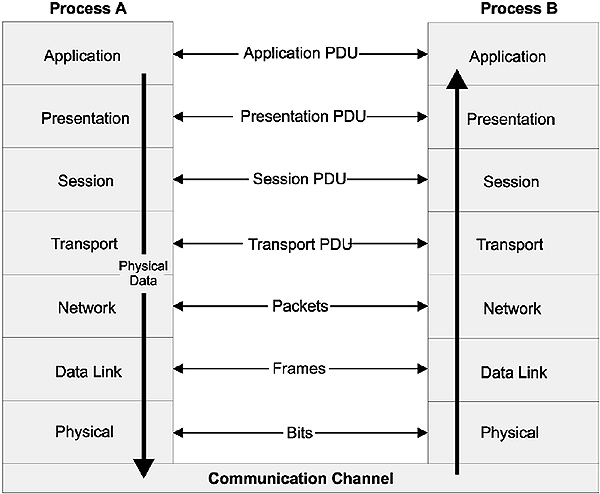

The OSI model supports a client/server model and since there must be at least two nodes to communicate, each layer also appears to converse with its peer layer at the other end of the communication channel in a virtual ('logical') communication. The concept of isolation of the process of each layer, together with standardized interfaces and peer-to-peer virtual communication, is fundamental to the concepts developed in a layered model such as the OSI model. This concept is shown in Figure 2.5.

The OSI layering concept

The actual functions within each layer are provided by entities (abstract devices such as programs, functions, or protocols) that implement the services for a particular layer on a single machine. A layer may have more than one entity – for example a protocol entity and a management entity. Entities in adjacent layers interact through the common upper and lower boundaries by passing physical information through Service Access Points (SAPs). A SAP could be compared to a predefined 'postbox' where one layer would collect data from the previous layer. The relationship between layers, entities, functions and SAPs is shown in Figure 2.6.

Relationship between layers, entities, functions and SAPs

In the OSI model, the entity in the next higher layer is referred to as the N+1 entity and the entity in the next lower layer as N–1. The services available to the higher layers are the result of the services provided by all the lower layers.

The functions and capabilities expected at each layer are specified in the model. However, the model does not prescribe how this functionality should be implemented. The focus in the model is on the 'interconnection' and on the information that can be passed over this connection. The OSI model does not concern itself with the internal operations of the systems involved.

When the OSI model was being developed, a number of principles were used to determine exactly how many layers this communication model should encompass. These principles are:

- A layer should be created where a different level of abstraction is required

- Each layer should perform a well-defined function

- The function of each layer should be chosen with thought given to defining internationally standardized protocols

- The layer boundaries should be chosen to minimize the information flow across the boundaries

- The number of layers should be large enough that distinct functions need not be thrown together in the same layer out of necessity and small enough that the architecture does not become unwieldy

The use of these principles led to seven layers being defined, each of which has been given a name in accordance with its process purpose. The diagram below shows the seven layers of the OSI model.

The OSI reference model

The service provided by any layer is expressed in the form of a service primitive with the data to be transferred as a parameter. A service primitive is a fundamental service request made between protocols. For example, layer W may sit on top of layer X. If W wishes to invoke a service from X, it may issue a service primitive in the form of X.Connect.request to X. An example of a service primitive is shown in Figure 2.8. Service primitives are normally used to transfer data between processes within a node.

Service primitive

Typically, each layer in the transmitting site, with the exception of the lowest, adds header information, or Protocol Control Information (PCI), to the data before passing it through the interface between adjacent layers. This interface defines which primitive operations and services the lower layer offers to the upper one. The headers are used to establish the peer-to-peer sessions across the sites and some layer implementations use the headers to invoke functions and services at the N+1 or N–1 adjacent layers.

At the transmitter, the user application (e.g. the client) invokes the system by passing data, primitive names and control information to the highest layer of the protocol stack. The stack then passes the data down through the seven layers, adding headers (and possibly trailers), and invoking functions in accordance with the rules of the protocol at each layer. At each level, this combined data and header is called a Protocol Data Unit or PDU. At the receiving site, the opposite occurs with the headers being stripped from the data as it is passed up through the layers. These header and control messages invoke services and a peer-to-peer logical interaction of entities across the sites. Generally speaking, layers in the same stack communicate with parameters passed through primitives, and peer layers communicate with the use of the PCI (headers) across the network.

At this stage it should be quite clear that there is no physical connection or direct communication between the peer layers of the communicating applications. Instead, all physical communication is across the Physical, or lowest layer of the stack. Communication is down through the protocol stack on the transmitting node and up through the stack on the receiving node. Figure 2.9 shows the full architecture of the OSI model, whilst Figure 2.10 shows the effects of the addition of headers to the respective PDUs at each layer. The net effect of this extra information is to reduce the overall bandwidth of the communications channel, since some of the available bandwidth is used to pass control information.

Full architecture of the OSI model

OSI message passing
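The addition and removal of headers as data moves down and up the stack can be sketched as follows. This is a simplified illustration: the bracketed header tags such as `[TH]` are hypothetical placeholders for real Protocol Control Information, trailers are omitted, and the Physical layer adds no header, as stated above.

```python
# Layers that add a header, listed from the top of the stack downwards.
LAYERS = ["Application", "Presentation", "Session",
          "Transport", "Network", "Data Link"]


def header_for(layer: str) -> bytes:
    """Hypothetical 4-byte header tag, e.g. b'[TH]' for the Transport layer."""
    return f"[{layer[0]}H]".encode("ascii")


def send_down(user_data: bytes) -> bytes:
    """Transmitting node: each layer prepends its header to the PDU from above."""
    pdu = user_data
    for layer in LAYERS:
        pdu = header_for(layer) + pdu
    return pdu                       # this is what the Physical layer transmits


def pass_up(frame: bytes) -> bytes:
    """Receiving node: each layer strips its own header, lowest layer first."""
    pdu = frame
    for layer in reversed(LAYERS):
        header = header_for(layer)
        if not pdu.startswith(header):
            raise ValueError(f"missing {layer} header")
        pdu = pdu[len(header):]
    return pdu


if __name__ == "__main__":
    frame = send_down(b"hello")
    print(frame)
    print(pass_up(frame))
    print("overhead bytes:", len(frame) - len(b"hello"))
```

The overhead figure printed at the end illustrates why the headers reduce the bandwidth available for user data.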

2.4.3 OSI layer services

Briefly, the services provided at each layer of the stack are:

- Application

Provision of network services to the user's application programs such as clients and servers. Note that the actual application programs do NOT reside here

- Presentation

Maps the data representations into an external data format that will enable correct interpretation of the information on receipt. The mapping can also possibly include encryption and/or compression of data

- Session

Control of the communications sessions between the users. This includes the grouping together of messages and the co-ordination of data transfer between grouped layers. It also inserts checkpoints for (transparent) recovery of aborted sessions

- Transport

The management of the communications between the two end systems

- Network

Responsible for the remote delivery of data packets. Functions include routing of data, network addressing, fragmentation of large packets, congestion and flow control

- Data Link

Responsible for sending a frame of data from one system to another. Attempts to ensure that errors in the received bit stream are not passed up into the rest of the protocol stack. Error correction and detection techniques are used here

- Physical

Defines the electrical and mechanical connections at the physical level, or the communication channel itself. Functional responsibilities include modulation, multiplexing and signal generation.

The medium itself is not part of the Physical layer, although the Physical layer defines which medium is to be used. A more specific discussion of each layer is now presented.

2.4.4 Application layer

The Application layer is the topmost layer in the OSI reference model. This layer is responsible for giving applications access to the network. Examples of application-layer tasks include file transfer, electronic mail (e-mail) services, and network management. Application-layer services are much more varied than the services in lower layers, because the entire range of application and task possibilities is available here. The specific details depend on the framework or model being used. For example, there are several network management applications. Each of these provides services and functions specified in a different framework for network management. Programs can get access to the application-layer services through Application Service Elements (ASEs). There are a variety of such application service elements; each designed for a class of tasks. To accomplish its tasks, the application layer passes program requests and data to the presentation layer, which is responsible for encoding the Application layer's data in the appropriate form.

2.4.5 Presentation layer

The Presentation layer is responsible for presenting information in a manner suitable for the applications or users dealing with the information. Functions such as data conversion from EBCDIC to ASCII (or vice versa), the use of special graphics or character sets, data compression or expansion, and data encryption or decryption are carried out at this layer. The Presentation layer provides services for the Application layer above it, and uses the Session layer below it. In practice, the Presentation layer rarely appears in pure form, and it is the least well defined of the OSI layers. Application- or Session-layer programs will often encompass some or all of the Presentation layer functions.

2.4.6 Session layer

The Session layer is responsible for synchronizing and sequencing the dialog and packets in a network connection. This layer is also responsible for ensuring that the connection is maintained until the transmission is complete and that the appropriate security measures are taken during a 'session' (i.e., a connection). The Session layer is used by the Presentation layer above it, and uses the Transport layer below it.

Transport layer

In the OSI reference model, the Transport layer is responsible for providing data transfer at an agreed-upon level of quality, such as at specified transmission speeds and error rates. To ensure delivery, some Transport layer protocols assign sequence numbers to outgoing packets. The Transport layer at the receiving end checks the packet numbers to make sure all have been delivered and to put the packet contents into the proper sequence for the recipient. The Transport layer provides services for the session layer above it, and uses the Network layer below it to find a route between source and destination. The Transport layer is crucial in many ways, because it sits between the upper layers (which are strongly application-dependent) and the lower ones (which are network-based).

The layers below the Transport layer are collectively known as the 'subnet' layers. Depending on how well (or not) they perform their functions, the Transport layer has to intervene less (or more) in order to maintain a reliable connection.

2.4.7 Network layer

The Network layer is the third layer from the bottom up, or the uppermost 'subnet layer'. It is responsible for the following tasks:

- Determining addresses or translating from hardware to network addresses. These addresses may be on a local network or they may refer to networks located elsewhere on an internetwork. One of the functions of the network layer is, in fact, to provide capabilities needed to communicate on an internetwork

- Finding a route between a source and a destination node or between two intermediate devices

- Splitting large packets of data into pieces small enough to be transmitted by the underlying Data Link layer, a process called fragmentation. The corresponding Network layer at the receiving node undertakes reassembly of the packet

2.4.8 Data Link layer

The Data Link layer is responsible for creating, transmitting, and receiving data packets. It provides services for the various protocols at the Network layer, and uses the Physical layer to transmit or receive material. The Data Link layer creates packets appropriate for the network architecture being used. Requests and data from the Network layer are part of the data in these packets (or frames, as they are often called at this layer). These packets are passed down to the Physical layer and from there they are transmitted to the Physical layer on the destination host via the medium. Network architectures (such as Ethernet, ARCnet, Token Ring, and FDDI) encompass the Data Link and Physical layers.

The IEEE 802 networking working groups have refined the Data Link layer into two sub-layers, viz. the Logical Link Control (LLC) sub-layer at the top and the Media Access Control (MAC) sub-layer at the bottom. The LLC sub-layer provides an interface for the Network layer protocols, and controls the logical communication with its peer at the receiving side. The MAC sub-layer controls physical access to the medium.

2.4.9 Physical layer

The Physical layer is the lowest layer in the OSI reference model. This layer gets data packets from the Data Link layer above it, and converts the contents of these packets into a series of electrical signals that represent '0' and '1' values in a digital transmission. These signals are sent across a transmission medium to the Physical layer at the receiving end. At the destination, the physical layer converts the electrical signals into a series of bit values. These values are grouped into packets and passed up to the Data Link layer.

The required mechanical and electrical properties of the transmission medium are defined at this level. These include:

- The type of cable and connectors used. Cable may be coaxial, twisted-pair, or fiber optic. The types of connectors depend on the type of cable

- The pin assignments for the cable and connectors. Pin assignments depend on the type of cable and also on the network architecture being used

- The format for the electrical signals. The encoding scheme used to signal '0' and '1' values in a digital transmission or particular values in an analog transmission depend on the network architecture being used

The medium itself is, however, not specified here. For example, Fast Ethernet dictates that Cat5 cable should be used, but the cable itself is specified in TIA/EIA-568B.

2.5 Interoperability and internetworking

Interoperability is the ability of network users to transfer information between different communications systems, irrespective of the way those systems are supported. One definition of interoperability is:

'The capability of using similar devices from different manufacturers as effective replacements for each other without losing functionality or sacrificing the degree of integration with the host system. In other words, it is the capability of software and hardware systems on different devices to communicate together. This results in the user being able to choose the right devices for an application independent of the supplier, control system and the protocol.'

Internetworking is a term that is used to describe the interconnection of differing networks so that they retain their own status as a network. What is important in these concepts is that internetworking devices be made available so that the exclusivity of each of the linked networks is retained, but that the ability to share information, and physical resources if necessary, becomes both seamless and transparent to the end user.

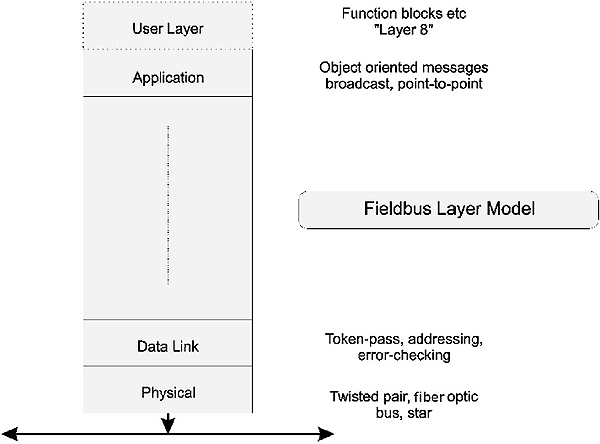

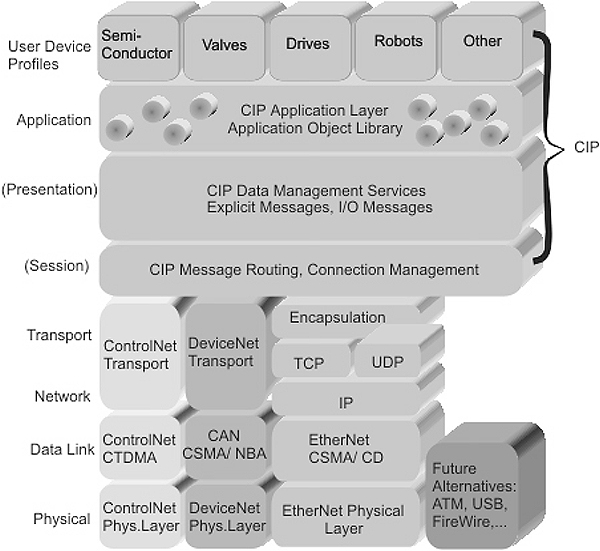

At the plant floor level all seven layers of the OSI model are often not required or appropriate. Hence a simplified OSI model is often preferred for industrial applications where time critical communication is more important than the full communications functionality provided by the seven layer model. Such a protocol stack is acceptable since there will be no internetworking at this level. A well-known stack is that of the S-50 Fieldbus standard, which is shown in Figure 2.11.

Generally most industrial protocols are written around the Data Link layer (to send/receive frames) and the Application layer (to deal with clients and servers). The Physical layer is required for access to the bus.

When the reduced OSI model is implemented the following limitations exist:

- The maximum size of the application messages is limited by the maximum size allowed on the channel, as there is no Network layer to fragment large packets

- No routing of messages is possible between different networks, as there is no Network layer

- Only half-duplex communication is possible, as there is no Session layer

- Message formats must be the same for all nodes (as there is no Presentation layer)

One of the challenges with the use of the OSI model is the concept of interoperability and the need for definition of another layer above the Application layer, called the 'User' layer. The user layer is not formally part of the OSI model but is found in systems such as DeviceNet, ProfiBus and Foundation Fieldbus to accommodate issues such as device profiles and software building blocks ('function blocks') for control.

Reduced OSI stack

Most modern field buses no longer adhere to the 3 layer concept, and implement a full stack (including TCP/IP) in order to provide routing and hence allow large-scale deployment. Examples are ProfiNet, IDA and Ethernet/IP.

From the point of view of internetworking, TCP/IP operates as a set of programs that interacts at the transport and network layer levels without needing to know the details of the technologies used in the underlying layers. As a consequence this has developed as a de facto industrial internetworking standard. Many manufacturers of proprietary equipment are using TCP/IP to facilitate internetworking.

2.6 Protocols and protocol standards

A protocol has already been defined as the rules for exchanging data in a manner that is understandable to both the transmitter and the receiver. There must be a formal and agreed set of rules if the communication is to be successful. The rules generally relate to such responsibilities as error detection and correction methods, flow control methods, and voltage and current standards. However, there are other properties such as the size of the data packet that are important in the protocols that are used on LANs.

Another important responsibility is the method of routing the packet, once it has been assembled. In a self-contained LAN, i.e. an intranetwork, this is not a problem since all packets will eventually reach their destination by virtue of design. However, if the packet is to be switched across networks, i.e. on an internetwork – such as a WAN – then a routing decision must be made. In this regard we have already examined the use of a datagram service vis-à-vis a virtual circuit.

In summary, there are many different types of protocols, but they can be classified in terms of their functional emphasis. One scheme of classification is:

- Master/slave vs peer-to-peer

A master/slave relationship requires that one of the communicators act as a master controller. Peer-to-peer protocols allow all communications to take place as and when required

- Connection-oriented vs connectionless

A connection-oriented protocol first establishes a logical connection with its counterpart before transmitting data. A connectionless protocol just sends the data regardless

- Asynchronous vs synchronous

Synchronous protocols send data in frames at the clock rate of the network. Asynchronous protocols send data one byte at a time, with a varying delay between each byte

- Layered vs monolithic

The OSI model illustrates a layered approach to protocols. The monolithic approach uses a single layer to provide all functionality

- Heavy vs light

A heavy protocol has a wide range of functions built in, and consequently incurs a high processing delay overhead. A light protocol incurs low processing delay but only provides minimal functionality

2.7 IEEE/ISO standards

The Institute of Electrical and Electronics Engineers in the USA has been given the task of developing standards for local area networking under the auspices of the IEEE 802 LAN/MAN Standards Committee (LMSC). Some IEEE LAN/MAN standards (but not all) are also ratified by the International Organization for Standardization and published as an ISO/IEC standard with an '8' in front of the 802 designation. To date, the following LAN/MAN standards have been published by the ISO: ISO/IEC 8802.1, ISO/IEC 8802.2, ISO/IEC 8802.3, ISO/IEC 8802.5 and ISO/IEC 8802.11.

The LMSC assigns various topics to working groups and Technical Advisory Groups (TAGs). Some of them are listed below, but it must be kept in mind that it is an ever-changing situation.

2.7.1 IEEE 802.1 Bridging and Management

This sub-committee is concerned with issues such as high level interfaces, internetworking and addressing.

There is a series of sub-committees (thirteen at present), such as:

- 802.1B LAN/WAN management

- 802.1D MAC bridges

- 802.1E System Load Protocol

- 802.1F Common Definitions and Procedures for IEEE 802 Management Information

- 802.1G Remote MAC bridging

- 802.1X Port Based Network Access Control

2.7.2 IEEE 802.2 Logical Link Control

This is the interface between the Network layer and the specific network environments at the Physical layer. The IEEE has divided the Data Link layer in the OSI model into two sub-layers viz. the Media Access Control (MAC) sub-layer, and the Logical Link Control (LLC) sub-layer. The LLC protocol is common for most IEEE 802 standard network types. This provides a common interface to the network layer of the protocol stack. The IEEE 802.2 protocol used at this sub-layer is based on the HDLC protocol (an ISO standard derived from IBM's SDLC), and can be used in three modes.

These are:

- Type 1: Unacknowledged connectionless link service

- Type 2: Connection oriented link service

- Type 3: Acknowledged connectionless link service, used in real time applications such as manufacturing control

2.7.3 IEEE 802.3 CSMA/CD

The Carrier Sense Multiple Access with Collision Detection (CSMA/CD) type LAN is commonly, but strictly speaking incorrectly, known as Ethernet. Ethernet actually refers to the original DEC/INTEL/XEROX product known as Version II (Bluebook) Ethernet.

Subsequent to ratification this system has been known as IEEE 802.3. IEEE 802.3 is virtually, but not entirely, identical to Bluebook Ethernet. The frame contents differ marginally and the following chapter will deal with this anomaly.

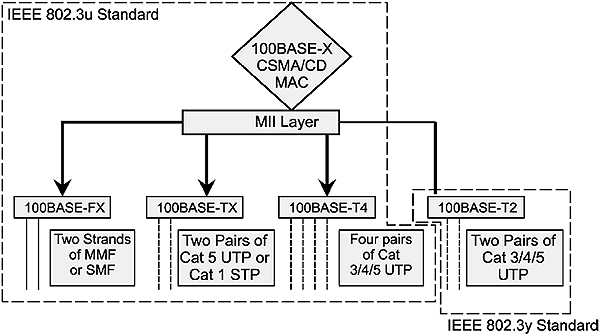

Subsequently, several additional specifications have been approved such as IEEE 802.3u (100 Mbps or Fast Ethernet), IEEE 802.3z (1000 Mbps or Gigabit Ethernet) and IEEE 802.3ae (10000 Mbps or Ten Gigabit Ethernet).

2.7.4 IEEE 802.5 Token Ring

This standard is the ratified version of the original IBM Token Ring LAN. In IEEE 802.5, data transmission can only occur when a station holds a token. The logical structure of the network wiring is in the form of a ring, and each message must cycle through each station connected to the ring.
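The token rule can be illustrated with a minimal sketch. It assumes, for simplicity, that a station sends at most one frame each time it holds the token; the function name `token_ring_schedule` and the station and frame names are hypothetical and are not taken from IEEE 802.5.

```python
from collections import deque


def token_ring_schedule(stations, pending, rounds=1):
    """Return the order in which queued frames get transmitted.

    stations: station names listed in ring order.
    pending:  dict mapping a station name to its list of queued frames.
    Assumption: a station sends at most one frame per token visit.
    """
    queues = {s: deque(pending.get(s, [])) for s in stations}
    sent = []
    for _ in range(rounds):
        for holder in stations:  # the token moves from station to station
            if queues[holder]:   # only the token holder may transmit
                sent.append((holder, queues[holder].popleft()))
            # a station with nothing to send simply passes the token on
    return sent


order = token_ring_schedule(
    ["A", "B", "C"],
    {"A": ["a1", "a2"], "C": ["c1"]},
    rounds=2,
)
print(order)
```

Station A has to wait for the token to complete a full rotation before it can send its second frame, even though B has nothing to send.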

The original specification called for a single ring, which creates a problem if the ring gets broken. A subsequent enhancement of the specification, IEEE 802.5u, introduced the concept of a dual redundant ring, which enables the system to continue operating in case of a cable break.

The original IBM Token Ring specification supported speeds of 4 and 16 Mbps. IEEE 802.5 at first supported only 1 and 4 Mbps, but currently includes 100 Mbps and 1 Gbps versions.

The physical media for Token Ring can be unshielded twisted pair, shielded twisted pair, coaxial cable or optical fiber.

2.7.5 IEEE 802.11 Wireless LANs

The IEEE 802.11 Wireless LAN standard uses the 2.4 GHz band and allows operation at 1 or 2 Mbps. The 802.11b standard also uses the 2.4 GHz band, but allows operation at 11 Mbps. The IEEE 802.11a specification uses the 5 GHz band instead and allows operation at 54 Mbps. The IEEE 802.11g specification allows operation at 54 Mbps using the 2.4 GHz band. The next generation of products (IEEE 802.11n) will support 100 Mbps.

2.7.6 IEEE 802.12 Demand Priority Access

This specification covers the system known as 100VG AnyLAN. Developed by Hewlett-Packard, this system operates on voice grade (Cat3) cable – hence the VG in the name. The AnyLAN indicates that the system can interface with both IEEE 802.3 and IEEE 802.5 networks (by means of a special speed adaptation bridge).

Other working groups and TAGs include:

- IEEE 802.15 Wireless PAN

- IEEE 802.16 Broadband wireless access

- IEEE 802.17 Resilient packet ring

- IEEE 802.18 Radio Regulatory TAG

- IEEE 802.19 Coexistence TAG

- IEEE 802.20 Mobile Broadband Wireless Access

- IEEE 802.21 Media Independent Handoff

- IEEE 802.22 Wireless Regional Area Network

There is no 'dot 13' working group. Many of the older working groups and TAGs (IEEE 802.4, 6, 7, 8, 9, 10 and 14) have been disbanded, while new ones are added as and when required.

2.8 Network topologies

2.8.1 Broadcast and point-to-point topologies

The way in which the nodes are connected to form a network is known as its topology. There are many topologies available but they can be categorized as either broadcast or point-to-point.

Broadcast topologies are those where the message ripples out from the transmitter to reach all nodes. There is no active regeneration of the signal by the nodes and so signal propagation is independent of the operation of the network electronics. This then limits the size of such networks.

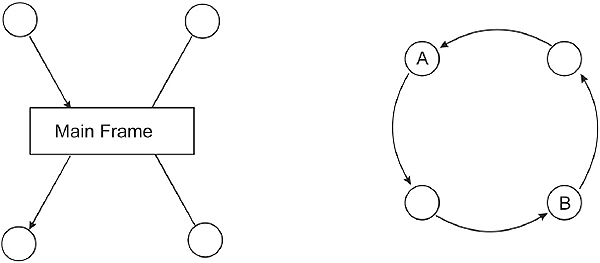

Figure 2.12 shows an example of a broadcast topology.

Broadcast topology

In a point-to-point communications network, however, each node is communicating directly with only one node. That node may actively regenerate the signal and pass it on to its nearest neighbor. Such networks have the capability of being made much larger. Figure 2.13 shows some examples of point-to-point topologies.

Point-to-point topologies

A logical topology defines how the elements in the network communicate with each other and how information is transmitted through a network. The different types of media access methods determine how a node gets to transmit information along the network. In a bus topology information is broadcast, and every node gets the same information within the amount of time it actually takes a signal to propagate down the entire length of cable. This time interval limits the maximum speed and size for the network. In a ring topology each node hears from exactly one node and talks to exactly one other node. Information is passed sequentially, in a predefined order. A polling or token mechanism is used to determine who has transmission rights, and a node can transmit only when it has this right.
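The propagation time referred to above can be estimated with a short calculation. This is a rough sketch only: the velocity factor of 0.66 and the 500 m cable length are illustrative assumptions, not values taken from this manual, and the function name `propagation_delay_s` is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def propagation_delay_s(cable_length_m, velocity_factor=0.66):
    """One-way propagation time along a cable, in seconds.

    velocity_factor is the signal speed in the cable as a fraction of the
    speed of light; 0.66 is an assumed, illustrative value.
    """
    if cable_length_m < 0:
        raise ValueError("cable length must not be negative")
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity factor must be in the range (0, 1]")
    return cable_length_m / (velocity_factor * SPEED_OF_LIGHT_M_PER_S)


delay = propagation_delay_s(500)
print(f"{delay * 1e6:.2f} microseconds end to end")
```

Doubling the cable length doubles the delay, which is why the end-to-end propagation time constrains how large a broadcast network can be made.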

A physical topology defines the wiring layout for a network. This specifies how the elements in the network are connected to each other electrically. This arrangement will determine what happens if a node on the network fails. Physical topologies fall into three main categories viz. bus, star, and ring. Combinations of these can be used to form hybrid topologies in order to overcome weaknesses or restrictions in one or other of these three component topologies.

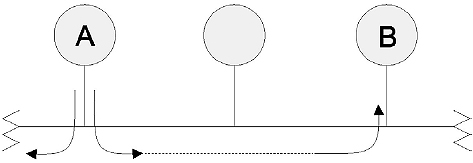

2.8.3 Bus topology

A bus refers to both a physical and a logical topology. As a physical topology a bus describes a network in which each node is connected to a common single communication channel or 'bus'. This bus is sometimes called a backbone, as it provides the spine for the network. Every node can hear each message packet as it goes past.

Logically, a passive bus is distinguished by the fact that packets are broadcast and every node gets the message at the same time. Transmitted packets travel in both directions along the bus, and need not go through the individual nodes, as in a point-to-point system. Instead, each node checks the destination address included in the frame header to determine whether that packet is intended for it or not. When the signal reaches the end of the bus, an electrical terminator absorbs the packet energy to keep it from reflecting back again along the bus cable, possibly interfering with other frames on the bus. Both ends of the bus must be terminated, so that signals are removed from the bus when they reach the end.
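The address check performed by each node can be sketched as follows. The class and function names (`Frame`, `BusNode`, `broadcast_on_bus`) and the single-letter addresses are hypothetical; the sketch models only the filtering logic, not the electrical behavior of the bus.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    destination: str
    source: str
    payload: str


@dataclass
class BusNode:
    address: str
    received: list = field(default_factory=list)

    def on_frame(self, frame):
        # Every node sees the frame; only the addressed node keeps it.
        if frame.destination == self.address:
            self.received.append(frame.payload)


def broadcast_on_bus(nodes, frame):
    """Present the frame to every attached node except the sender."""
    for node in nodes:
        if node.address != frame.source:
            node.on_frame(frame)


nodes = [BusNode("A"), BusNode("B"), BusNode("C")]
broadcast_on_bus(nodes, Frame(destination="C", source="A", payload="hello"))
print({n.address: n.received for n in nodes})
```

Node B is handed the frame just as node C is, but discards it after comparing the destination address with its own.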

If the bus is too long, it may be necessary to boost the signal strength using some form of amplification, or repeater. The maximum length of the bus is primarily limited by signal attenuation issues. Figure 2.14 illustrates the bus topology.

Bus topology

Advantages of a bus topology

Bus topologies offer the following advantages:

- A bus uses relatively little cable compared to other topologies, and arguably has the simplest wiring arrangement

- Since nodes are connected by high impedance taps across a backbone cable, it is easy to add or remove nodes from a bus. This makes it easy to extend a bus topology

- Architectures based on this topology are simple and flexible

- The broadcasting of messages is advantageous for one-to-many data transmissions

Disadvantages of a bus topology

These include:

- There can be a security problem, since every node may see every message, even those that are not destined for it

- Troubleshooting can be difficult, since the fault can be anywhere along the bus

- There is no automatic acknowledgment of messages, since messages get absorbed at the end of the bus and do not return to the sender

- The bus can be a bottleneck when network traffic gets heavy. This is because nodes can spend much of their time trying to access the network

- In baseband systems a bus can only support half-duplex

2.8.4 Star topology

In a physical star topology multiple nodes are connected to a central component, generally known as a hub. The hub of a star is often just a wiring center; i.e. a common termination point for the node cables. In some cases the hub may actually be a file server (a central computer that contains a centralized file and control system), with all the nodes attached to it with point-to-point links. When used as a wiring center a hub may, in turn, be connected to the file server or to another hub.

All frames going to and from each node must pass through the hub to which the node is connected. The telephone system is the best known example of a star topology, with lines to individual subscribers coming from a central telephone exchange location.

There are not many LAN implementations that use a logical star topology. The low impedance ARCNet networks are probably the best example. However, the physical layout of many other LANs resembles a star topology even though they are logically interconnected in a different way. An example of a star topology is shown in Figure 2.15.

Star topology

Advantages of a star topology

- Troubleshooting and fault isolation are easy

- It is easy to add or remove nodes, and to modify the cable layout

- Failure of a single node does not isolate any other node

- The inclusion of a central hub allows easier monitoring of traffic for management purposes

Disadvantages of a star topology

- If the hub fails, the entire network fails. Sometimes a backup central machine is included, to make it possible to deal with such a failure

- A star topology requires a lot of cable
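The difference in cable requirements between a bus and a star can be made concrete with a simplified comparison. The sketch assumes all nodes lie along a single straight line (for example a corridor), ignores drop cables on the bus, and uses hypothetical positions in meters; real installations will differ.

```python
def bus_cable_length(positions):
    """Backbone length needed to pass every node on a straight line."""
    return max(positions) - min(positions)


def star_cable_length(positions, hub_position):
    """Total length of the individual cable runs from each node to the hub."""
    return sum(abs(p - hub_position) for p in positions)


positions = [0, 10, 20, 30, 40]  # assumed node positions in meters
bus = bus_cable_length(positions)
star = star_cable_length(positions, hub_position=20)
print(f"bus: {bus} m, star: {star} m")
```

Even with the hub placed in the middle of this layout, the star needs more cable than the bus, because every node requires its own run back to the hub.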

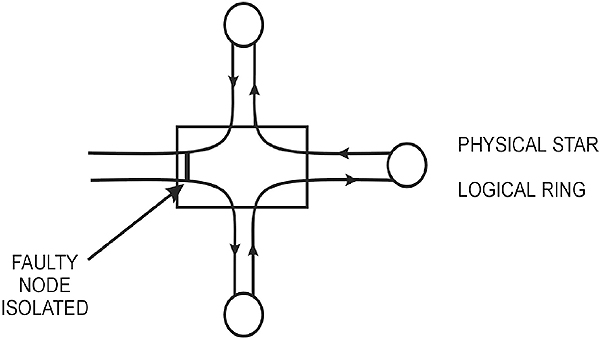

2.8.5 Ring topology

In a physical ring topology the frames are transmitted sequentially from node to node, in a predefined order. Nodes are arranged in a closed loop, so that the initiating node is the last one to receive a frame. As such it is an example of a point-to-point system.

Information traverses a one-way path, so that a node receives frames from exactly one node and transmits them to exactly one other node. A message frame travels around the ring until it returns to the node that originally sent it. Each node checks whether the message frame's destination node matches its address. When the frame reaches its destination, the destination node accepts the message and then sends it back to the sender in order to acknowledge receipt.
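The path of a frame around the ring can be traced with a small sketch. The function name `circulate` and the node names are hypothetical, and the acknowledgment is modeled simply as a flag that is set when the frame passes its destination.

```python
def circulate(ring, source, destination):
    """Trace one frame around a one-way ring.

    ring: node names listed in the direction of transmission.
    Returns (hops, acknowledged), where hops lists the nodes the frame
    visits after leaving the source, ending back at the source.
    """
    if source not in ring or destination not in ring:
        raise ValueError("source and destination must both be on the ring")
    start = ring.index(source)
    hops = []
    acknowledged = False
    for step in range(1, len(ring) + 1):
        node = ring[(start + step) % len(ring)]
        hops.append(node)
        if node == destination:
            # The destination accepts the message; the frame then continues
            # around the ring, carrying the acknowledgment to the sender.
            acknowledged = True
    return hops, acknowledged


hops, acknowledged = circulate(["A", "B", "C", "D"], source="B", destination="D")
print(hops, acknowledged)
```

The frame visits every node on the ring exactly once, and the sender learns of successful delivery only when the frame completes the loop.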